How much is too much? Navigating the AI privacy debate on personalisation


Recently, ChatGPT’s new GPT-4o image generation update sparked a creative explosion. Social media feeds were flooded with images of friends and family members transformed into Studio Ghibli characters. The viral “Ghiblify” trend is just the tip of the iceberg for AI, and a very distinctive way of showcasing its personalisation capabilities.

However, shouldn’t users pause and consider the broader implications of this data exchange?

More than just fun filters

Let’s be clear: the appeal is undeniable. Type a simple prompt like “Ghiblify this”, and you can transform ordinary photos into artwork reminiscent of beloved Hayao Miyazaki films. Our daily lives are increasingly shaped by AI creativity.

Has the accessibility of this feature democratised creative expression, or is it simply a move to attract new users who don’t yet understand AI?

Sure, it’s fun, but it often blurs the line between AI’s actual capabilities and public perception. Do we want people to think, “Oh, it’s just a tool for hyper-personalisation”? Shouldn’t we focus more on integrating its learning and reasoning capabilities into our daily lives?

AI literacy is a huge problem. The gap between AI’s rapid growth and people’s understanding of how to use it effectively has created a pool of users who don’t need a forceful nudge; they need education.

Let’s focus on capabilities that extend far beyond character filters. Even brands used the trend to climb the popularity rankings. The technology has enabled businesses and creators to generate marketing materials, product visualisations, and brand assets with remarkable efficiency. However, we should remember that we are not developing AI to mimic existing styles; we want companies to find their own unique style.

Use AI’s reasoning and learning capabilities to study your competitors, understand the market, and act independently. True hyper-personalisation means developing unique visual languages that resonate with specific audiences, rather than blindly copying others’ approaches and adding to the digital waste.

Beyond Ghibli: The age of hyper-personalisation

A 2023 Stanford Digital Economy Lab study found that 76% of consumers appreciate personalised recommendations, yet 68% express concern about how their data is collected and used. Let’s consider how and why brands turn to AI for hyper-personalisation to stay competitive in modern markets.

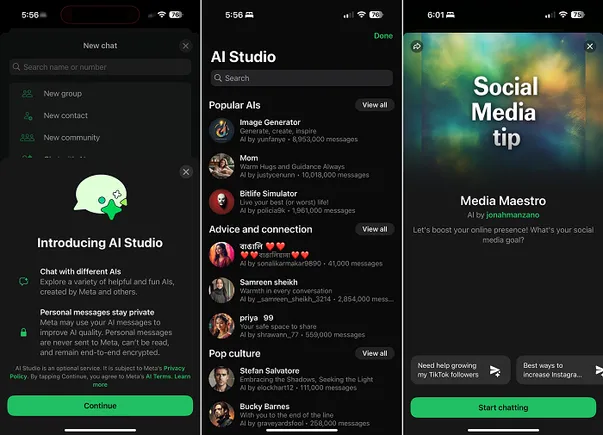

- AI can generate dynamic content, from email templates to social media posts, calibrated to individuals’ choices, behaviours, and engagement preferences.

- AI can adjust shopping experiences in real time based on browsing behaviour, purchase history, and even emotional state detected through interaction patterns.

- Brands can craft visual content tailored to users’ engagement, content preferences, and browsing history.

Each of these applications represents a valuable innovation while simultaneously raising important privacy questions. So now we have brands that need hyper-personalisation, users who want it but don’t fully understand it, and AI that can help address both sides.

Finding the balance: Practical steps for privacy-conscious users

Regulations should set out clear ways of telling people how their data is used for hyper-personalisation. Users, for their part, must be aware of practical strategies to stay in control, such as supporting privacy-first innovations, employing a “privacy budget”, and reading privacy policies.

Ethics in personalisation

For creators and businesses leveraging AI personalisation, ethical considerations are paramount. AI products need to be built in ways that respect users’ privacy.

It starts with transparency: tell users that your company uses their data to craft a personalised experience. Second, give users who do not want to share their data a clear opt-out. Lastly, collect only what is necessary and set firm boundaries on data collection.

AI agents will soon roll out across industries at scale. As they do, data privacy and ethical guidelines will become increasingly important topics of discussion and debate. Companies that successfully strike this balance will emerge as the trusted winners in the marketplace.

Personalise with purpose

We need to navigate this new frontier differently. We must focus on personalisation with purpose. People should be educated about the value exchange in each interaction with AI tools, make conscious choices, and understand more about AI ethics and privacy policies.

In the end, enhancing human creativity sustainably does not need to compromise one’s fundamental right to privacy. Organisations should treat finding this balance as a fundamental business goal. As the AI landscape moves towards autonomous systems, consumer trust is the ultimate competitive advantage.

Ronik Patel is the Founder and CEO of Weam.ai

Edited by Suman Singh

(Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the views of YourStory.)
