Meta ‘stole’ my book to train its AI – but there’s a bigger problem

Tech companies are using books to feed AI without consent. But the problem goes beyond copyright – it’s about creativity, value, and ownership.

A shadow library might sound like something from a fantasy novel – but it’s real, and far more troubling.

It’s an online archive of pirated books, academic papers, and other people’s work, taken without permission. These libraries have always been controversial. But in the AI world, they’re an open secret – rich sources of high-quality writing used to train large language models.

The books in them are goldmines because they're long-form, emotional, diverse and generally well-written. Using them to ‘train’ AI is a shortcut to teaching these tools how humans think, feel, and express themselves. But licensing them properly would be expensive and messy. So tech companies just didn’t bother.

This quiet exploitation exploded into public view in March 2025 when The Atlantic released a tool that lets anyone search for their books in LibGen (Library Genesis), one of the biggest shadow libraries.

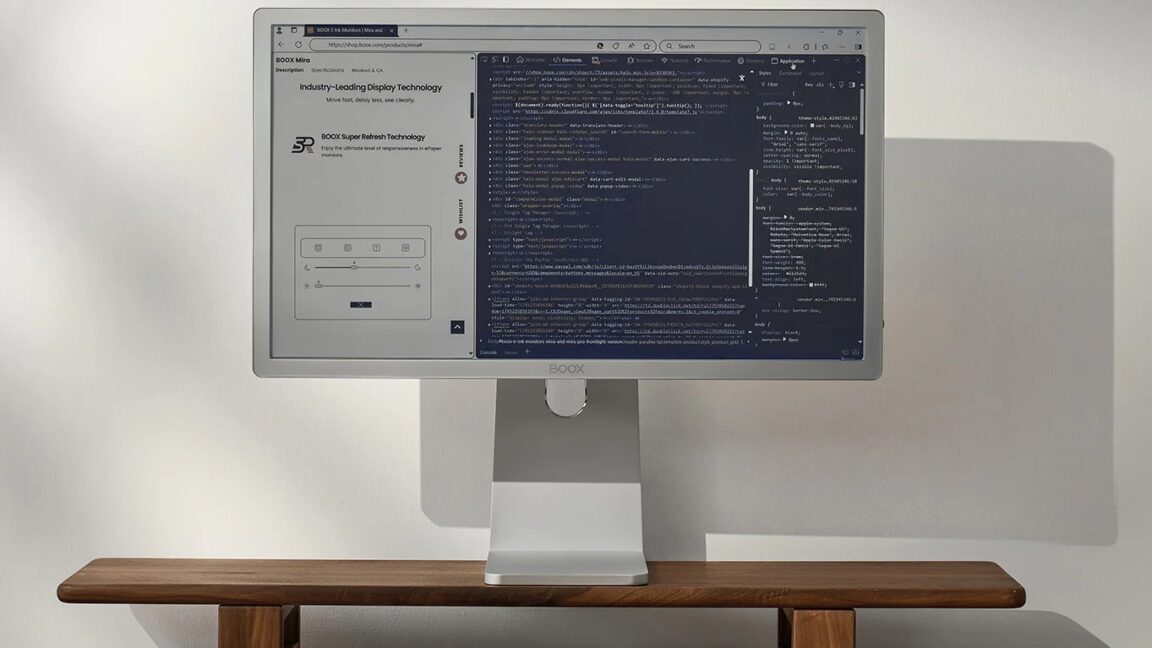

And there it was, my book Screen Time, along with millions of others.

Legal documents have revealed that Meta, the parent company of Facebook and Instagram, used LibGen to train its large language models, including LLaMA 3. Not every title was necessarily used, but the possibility alone is enough to leave authors reeling.

As a tech journalist, I’ve always tried to stay level-headed about AI – curious but critical. But when it’s your book that’s been ‘stolen’ to train AI, it hits differently. You think about the hours, the edits, the emotion. The despair and euphoria of creating something from nothing.

It feels as if all of that has been swallowed whole by a system that mimics creativity while erasing the creator. The outrage from authors is real – the lack of consent, the lack of compensation. But what haunts me is something deeper, a grief for creativity itself, and the sense it's slipping away.

Fair use or foul play?

Meta has been under legal scrutiny about this for some time. In 2023, authors including Richard Kadrey, Sarah Silverman, Andrew Sean Greer, and Junot Díaz took legal action against the company, alleging it used their books without consent to train its large language models.

Meta's defence has been that training its AI models on copyrighted material constitutes what's known as fair use. One of the company's arguments is that the process is transformative, as the AI doesn't reproduce the original works but instead learns patterns from them to generate new content.

Laws in the UK and US differ. In the UK, using copyrighted work in this way is generally considered unlawful unless it falls under specific exceptions like "fair dealing," which has a narrower scope than the US doctrine of "fair use." In the US, the legality will hinge on how "fair use" is interpreted, which is currently being tested in ongoing legal disputes. The outcomes will likely set significant precedents for future AI copyright law.

Meta and other AI advocates insist these systems will bring us enormous benefits. Can't the ends justify the means? Personally, I can appreciate that argument. But let's not kid ourselves – Meta's primary motivation is profit. The company is leveraging creative works as raw material to scale its AI capabilities.

Writer and author Lauren Bravo, whose books Probably Nothing, Preloved and What Would the Spice Girls Do? were scraped into LibGen, told me: “I feel furious about my books being on there, for myriad reasons. It's hard enough to make a decent living from writing books these days – the average author's income is £7k! – so to know that a company worth over a trillion dollars felt it was reasonable to use our work without throwing us a few quid is so enraging there aren't even words for it.”

Historian, broadcaster and author Dr Fern Riddell, whose books Death in Ten Minutes, Sex: Lessons From History and The Victorian Guide to Sex were also in the LibGen database, said: “It’s absolutely devastating to see yours – and many others’ – life’s work stolen by a billion-dollar company. This is not the proliferation of ideas. It’s straightforward theft to make Meta money. The scale of it is almost incomprehensible – all my books have been stolen, along with my right to protect my work.”

Like Bravo and Riddell, other authors are understandably angry and confused about what this means for the future. The Society of Authors and several other organizations are considering adding to the mounting legal action against Meta. Maybe change will come – new licensing rules, more transparency, opt-in models. But it feels like too little, too late.

“Writing a book is a long and deeply personal process for any author. But because mine talks about losing both my parents at a young age – from coping with grief as a teenager to caring for my mum through cancer – it feels extra personal,” writer and corporate content consultant Rochelle Bugg, whose book Handle With Care is also in the dataset, tells me. “I poured my heart and soul into my book, so the fact it has been taken, without my knowledge or consent, and used to train AI models that will generate profit for someone else seems totally unjust and completely indefensible.”

There's something uniquely painful about deeply personal work being scraped and repurposed, especially without permission.

The art of being human

These latest incidents have raised all sorts of questions not just about copyright, but about creativity – and how little we seem to value it.

"I fear it's symptomatic of something much larger that's been going on for decades," Lauren Bravo tells me. "The way creative work has been dramatically devalued by the internet."

"We've all participated in it," she adds. "In some ways, the democratization of content has been brilliant. But the sinister flipside is that we now expect to consume creative work for free – writing, music, art, even porn."

Generative AI tools take that mindset and dial it up to eleven. Why pay for anything when a quick prompt can make it instantly?

Take the viral AI-generated Studio Ghibli trend. It looks charming until you remember that Ghibli co-founder Hayao Miyazaki has publicly condemned AI-generated animation. Yes, AI can gobble up his art and copy its style anyway. But is that kind of mimicry creativity? Is it still art if it's made without permission, or without the human experience that shaped it?

Some of us obsess over these questions. But honestly? It's starting to look like few others care. Tech companies mine data. Users get the dopamine hit of jumping on a new trend. Everyone keeps scrolling.

Author Philip Ellis, whose books We Could Be Heroes and Love & Other Scams were scraped into LibGen, told me: "I see artists online trying to educate their followers about AI's environmental impact. But as the action figure trend has shown, your average person is still ignorant – maybe wilfully – of how bad generative AI is proving to be. Not just for the climate, but for culture."

Sam Altman, CEO of OpenAI (the company behind ChatGPT), takes an unsurprisingly optimistic view. In a recent TED 2025 interview, he claimed generative AI can democratize creativity.

He acknowledges the ethical complexities – copying styles, lack of consent – and has floated ideas like opt-in revenue sharing. But even he admits that attribution, consent, and fair pay are still "big questions."

OpenAI blocks users from mimicking living artists directly, but broader genre imitation is still allowed. Altman insists that every leap in creative tech has led to "better output." But who decides what's "better"? And who benefits?

The next danger is that young creators might look at this landscape and wonder if creating is still worth it.

"I'm scared that we'll lose a future generation of painters, authors, musicians," Ellis tells me. "That they won't feel the thrill of discovery. The joy of putting hours into a creative pursuit for its own sake. Because companies like Meta have told them a machine can do the hard part – as if the hard part isn't the whole point."

What we lose when we turn to AI to "create" for us isn’t just jobs or royalties. We also lose the messy, magical process that gives art its meaning. Creators aren’t prompt-fed machines. They're emotional, chaotic and alive. Every poem, novel, song or sketch is shaped by memory, trauma, boredom, desire. That’s what we connect to, isn't it? Not polish, but meaning and soul.

As Ellis told me: "Even if I'm never published again, I'll still carry on writing. Because the act itself – of crafting characters and worlds that seem to exist almost independently of me – is what makes me happy."

AI can certainly produce something that resembles art. Sometimes it's clever. Sometimes it's even beautiful. But the AI tool you use doesn’t feel or care or know why it exists. Instead, it works by interpreting a prompt, then borrowing, blending, remixing and regurgitating. So we have to ask: is what AI creates still creativity in the absence of a human creator?

It’s the kind of question that keeps me up at night. And maybe ultimately it no longer matters. Maybe the very idea of creativity is being rewritten. Tech giants certainly promise us bold new forms of expression through AI. And many people are clearly excited by that prospect. But let’s at least be honest: these systems weren’t built to nurture our creativity. They were built to monetize it.

This isn’t just about my book, or even the 7.5 million others in LibGen. It’s about what we choose to value: art, culture and the wild and weird richness of human experience. Because the truth is, we're not just training machines. We’re training ourselves to accept a world where our most meaningful expressions become raw material for someone else’s profit. And if we’re not careful, we’ll forget what it ever felt like to make something real.