I sat down with two cooling experts to find out what AI's biggest problem is in the data center

Cooling crisis looms as AI data centers outgrow traditional air systems.

- AI data centers overwhelm air cooling with rising power and heat

- Liquid cooling is becoming essential as server density surges with AI growth

- New hybrid cooling cuts power and water but faces adoption hesitance

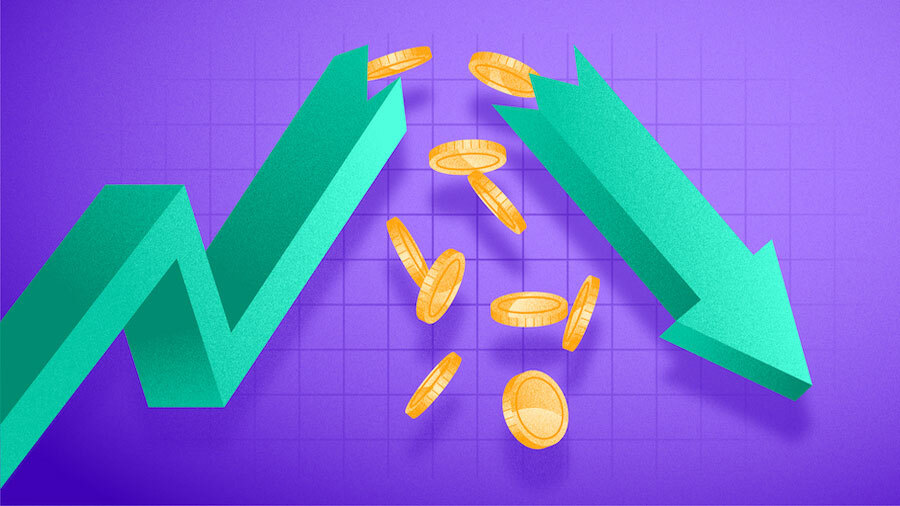

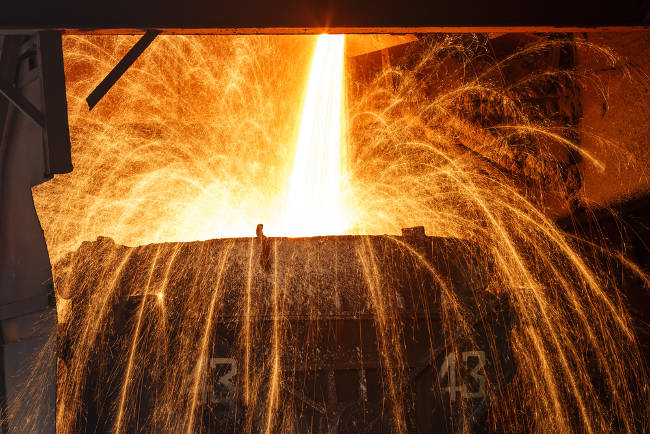

As AI transforms everything from search engines to logistics, its hidden costs are becoming harder and harder to ignore, especially in the data center. The power needed to run generative AI is pushing infrastructure beyond what traditional air cooling can handle.

To explore the scale of the challenge, I spoke with Daren Shumate, founder of Shumate Engineering, and Stephen Spinazzola, the firm’s Director of Mission Critical Services.

With decades of experience building major data centers, they’re now focused on solving AI’s energy and cooling demands. From failing air systems to the promise of new hybrid cooling, they explained why AI is forcing data centers into a new era.

What are the biggest challenges in cooling a data center?

Stephen Spinazzola: The biggest challenges in cooling data centers are power, water and space. With high-density computing, like the data centers that run artificial intelligence, comes immense heat that cannot be cooled with a conventional air-cooling system.

Typical cabinet loads have doubled and tripled with the deployment of AI. An air-cooling system simply cannot capture the heat produced by the high kW-per-cabinet loads of AI cabinet clusters.

We have performed computational fluid dynamics (CFD) analysis on numerous data center halls, and with an air-cooling system the models show temperatures above acceptable levels. The airflows we map with CFD show temperatures above 115 degrees F (about 46 degrees C). This can result in servers shutting down.

Water cooling can be done in a smaller space with less power, but it requires an enormous amount of water. A recent study determined that a single hyperscale facility would need 1.5 million liters of water per day to provide cooling and humidification.

These limitations pose great challenges to engineers while planning the new generation of data centers that can support the unprecedented demand we’re seeing for AI.

How is AI changing the norm when it comes to data center heat dissipation?

Stephen Spinazzola: With CFD modeling showing that servers could shut down under conventional air cooling within AI cabinet clusters, direct liquid cooling (DLC) is required. AI is typically deployed in clusters of 20 to 30 cabinets at or above 40 kW per cabinet. This represents a fourfold increase in kW per cabinet with the deployment of AI. The difference is staggering.
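To put those figures in perspective, here is a rough back-of-the-envelope sketch of the heat load of one AI cluster. The cabinet counts, the 40 kW figure and the fourfold multiplier come from the interview; the baseline of roughly 10 kW per cabinet is only what the fourfold claim implies, not a number Spinazzola quoted, and the function name is hypothetical.

```python
def cluster_load_kw(cabinets: int, kw_per_cabinet: float) -> float:
    """Total IT load of one cabinet cluster, in kW."""
    return cabinets * kw_per_cabinet


AI_KW_PER_CABINET = 40.0   # from the interview: "at or above 40 kW per cabinet"
DENSITY_MULTIPLIER = 4.0   # from the interview: "fourfold increase"

# Assumption: the pre-AI baseline is simply the AI figure divided by four.
implied_baseline_kw = AI_KW_PER_CABINET / DENSITY_MULTIPLIER

for cabinets in (20, 30):
    ai_kw = cluster_load_kw(cabinets, AI_KW_PER_CABINET)
    legacy_kw = cluster_load_kw(cabinets, implied_baseline_kw)
    print(f"{cabinets} cabinets: {ai_kw:.0f} kW with AI vs {legacy_kw:.0f} kW before")
```

Under these assumptions a single cluster lands somewhere between 800 kW and 1.2 MW, nearly all of which ends up as heat the cooling system has to remove.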

A typical ChatGPT query uses about 10 times more energy than a Google search, and that’s just for a basic generative AI function. More advanced queries require substantially more power and have to go through an AI cluster farm to process large-scale computing between multiple machines.

It changes the way we think about power. Consequently, the energy demands are shifting the industry away from traditional air cooling and toward liquid-cooling techniques.

We talk a lot about cooling. What about delivering actual power?

Daren Shumate: There are two overarching new challenges in delivering power to AI computing: how to move power from UPS output boards to high-density racks, and how to creatively deliver high densities of UPS power from the utility.

Power to racks is still accomplished with either branch circuits from distribution PDUs to rack PDUs (plug strips) or with plug-in busway over the racks with the in-rack PDUs plugging into the busway at each rack. The nuance now is what ampacity of busway makes sense with the striping and what is commercially available.

Even with plug-in busway available at an ampacity of 1,200 A, the density of power is forcing the deployment of a larger quantity of separate busway circuits to meet the density and striping requirements. Further complicating power distribution are the specific and varying requirements of individual data center end users, from branch circuit monitoring to distribution preferences.
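A rough sketch shows why even a 1,200 A busway runs out quickly at AI densities. Only the 1,200 A ampacity and the 40 kW per cabinet figure come from the interview. The 415 V three-phase distribution voltage, the 80 percent continuous-load factor and the unity power factor are illustrative assumptions, and the function name is hypothetical; real designs depend on the site, the code in force and the redundancy (striping) scheme.

```python
import math


def busway_capacity_kw(amps: float, volts_line_to_line: float,
                       load_factor: float = 0.8,
                       power_factor: float = 1.0) -> float:
    """Usable three-phase power of a busway in kW: sqrt(3) * V * I * PF * load factor."""
    va = math.sqrt(3) * volts_line_to_line * amps
    return va * power_factor * load_factor / 1000.0


BUSWAY_AMPS = 1200.0        # from the interview
ASSUMED_VOLTS = 415.0       # assumption: 415 V three-phase distribution
KW_PER_CABINET = 40.0       # from the interview

usable_kw = busway_capacity_kw(BUSWAY_AMPS, ASSUMED_VOLTS)
cabinets_supported = int(usable_kw // KW_PER_CABINET)
print(f"Usable busway capacity: {usable_kw:.0f} kW")
print(f"Cabinets supported at {KW_PER_CABINET:.0f} kW each: {cabinets_supported}")
```

Under these assumptions one busway carries fewer cabinets than a typical 20 to 30 cabinet AI cluster contains, and that is before any redundant feed is added, which is consistent with the need for multiple separate busway circuits.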

Depending upon site constraints, data center designs can feature medium voltage (MV) UPS. Driven by voltage drop concerns, the MV UPS avoids the need for very large feeder duct banks but also introduces new medium voltage-to-utilization voltage substations into the program. When considering medium voltage UPS, another question is the applicability of MV rotary UPS systems versus static MV solutions.

What are the advantages/disadvantages of the various cooling techniques?

Stephen Spinazzola: There are two types of DLC on the market today: immersion cooling and cold plate. Immersion cooling uses large tanks of a non-conductive fluid with the servers positioned vertically and fully immersed in the liquid.

The heat generated by the servers is transferred to the fluid and then transferred to the building's chilled water system with a closed-loop heat exchanger. Immersion tanks take up less space but require servers that are configured for this type of cooling.

Cold-plate cooling uses a heat sink attached to the bottom of the chip stack that transfers the energy from the chip stack to a fluid that is piped throughout the cabinet. The fluid is then piped to an end-of-row Cooling Distribution Unit (CDU) that transfers the energy to the building chilled water system.

The CDU contains a heat exchanger to transfer energy and 2N pumps on the secondary side of the heat exchanger to ensure continuous fluid flow to the servers. Cold-plate cooling is effective at server cooling, but it requires a huge number of fluid pipe connectors that must have leak-stop disconnect technology.

Air cooling is a proven technique for cooling data centers and has been around for decades; however, it is inefficient for the high-density racks that AI data centers need. As the loads increase, it becomes harder to failure-proof it using CFD modeling.

You're presenting a different cooler. How does it work, and what are the current challenges to adoption?

Stephen Spinazzola: Our patent-pending Hybrid-Dry/Adiabatic Cooling (HDAC) design solution uniquely provides two temperatures of cooling fluid from a single closed loop, allowing for a higher temperature fluid to cool DLC servers and a lower temperature fluid for conventional air cooling.

Because HDAC simultaneously uses 90 percent less water than a chiller-cooling tower system and 50 percent less energy than an air-cooled chiller system, we’ve managed to get the all-important Power Usage Effectiveness (PUE) figure all the way down to about 1.1 annualized for the type of hyperscale data center that is needed to process AI. Typical AI data centers produce a PUE ranging from 1.2 to 1.4.

With the lower PUE, HDAC provides approximately 12% more usable IT power from the same size utility feed. Both the economic and environmental benefits are significant, and HDAC requires only “a sip of water”.
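The link between PUE and usable IT power can be checked with simple arithmetic. PUE is total facility power divided by IT power, so for a fixed utility feed the IT power available is the feed divided by the PUE. The sketch below uses the PUE figures quoted in the interview; the 100 MW feed and the function names are hypothetical, chosen only for illustration.

```python
def usable_it_power_mw(feed_mw: float, pue: float) -> float:
    """IT power available from a fixed feed: PUE = total power / IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return feed_mw / pue


def it_power_gain(pue_baseline: float, pue_improved: float) -> float:
    """Fractional increase in usable IT power when PUE drops, feed held constant."""
    return pue_baseline / pue_improved - 1.0


FEED_MW = 100.0   # assumption: illustrative feed size, not from the interview
HDAC_PUE = 1.1    # from the interview

for baseline in (1.2, 1.3, 1.4):   # typical range quoted in the interview
    gain = it_power_gain(baseline, HDAC_PUE)
    extra_mw = usable_it_power_mw(FEED_MW, HDAC_PUE) - usable_it_power_mw(FEED_MW, baseline)
    print(f"PUE {baseline} -> {HDAC_PUE}: {gain:.1%} more IT power ({extra_mw:.1f} MW)")
```

By this arithmetic the gain ranges from roughly 9 percent against a 1.2 baseline to about 27 percent against 1.4, and a 12 percent gain corresponds to a baseline PUE of about 1.23.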

The challenge to adoption is simple: nobody wants to go first.
