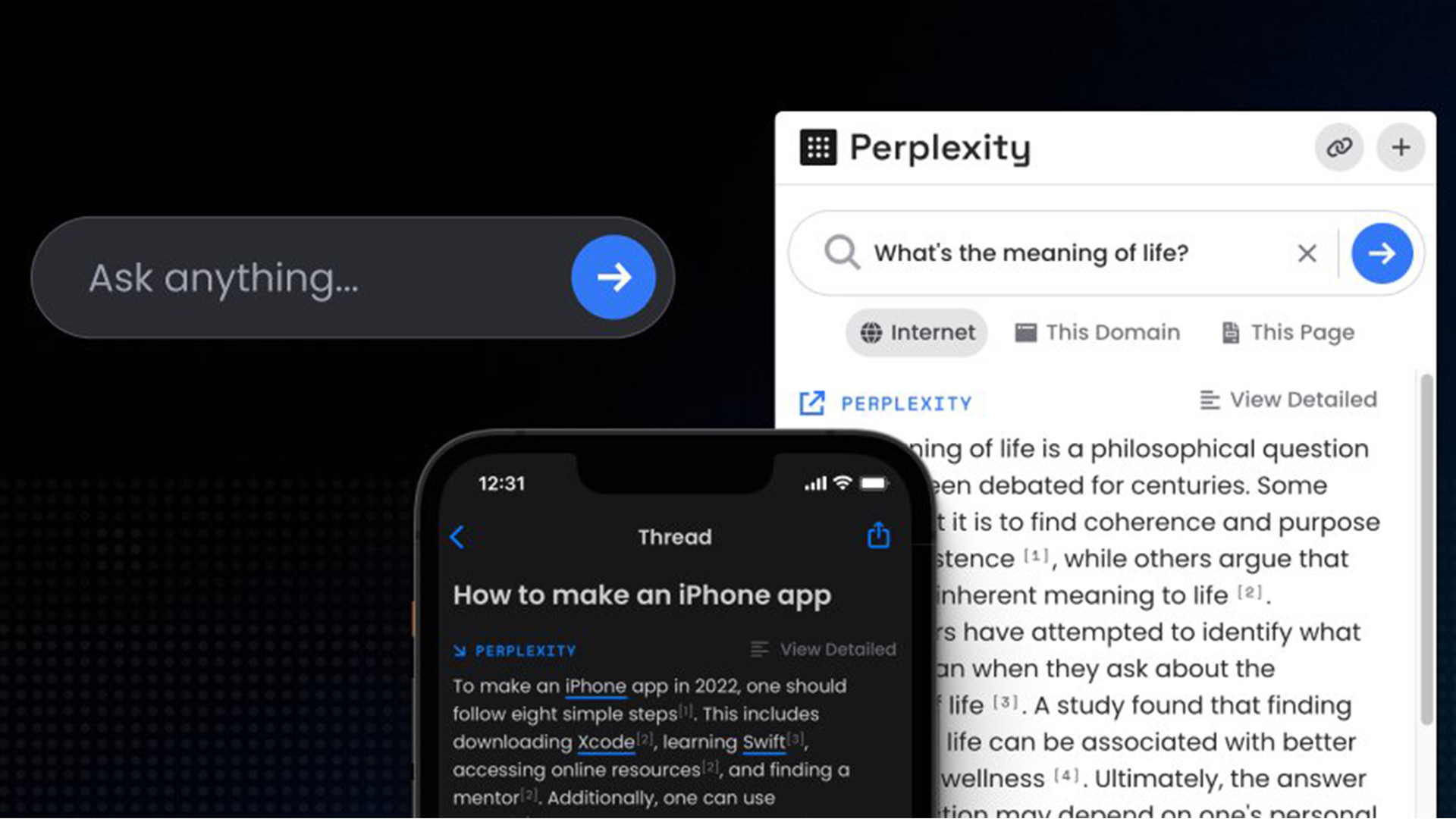

WTF is Retrieval Augmented Generation for AI chatbots and large language models?

Companies can use RAG to provide chatbots with up-to-date information while controlling what information is made available to the large language models powering them.

AI-powered chatbots are scary smart, thanks to the large language models undergirding them. But they’re only as smart as the data they’re trained on.

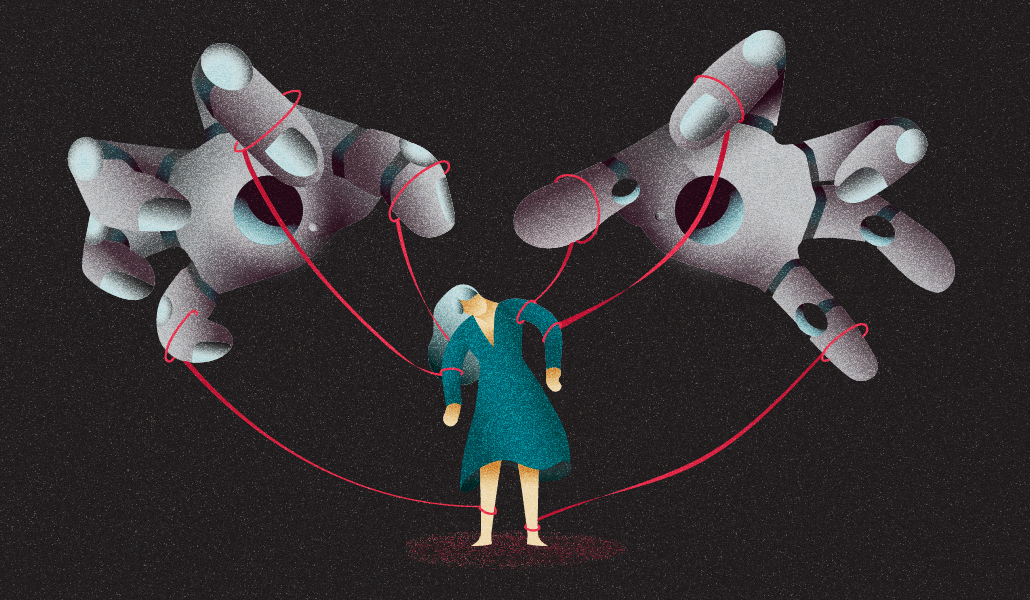

Two major factors can limit the AI chatbots that brands and publishers are building: how current the bots’ training data is, and whether proprietary information is included in that data set. In the first case, a company would need a way to pass information to the LLM powering its chatbot in real time. In the second case, the company would need to safeguard that information so that the LLM receives only the context it needs to respond to someone’s prompt. In both cases, a system called “retrieval augmented generation” (RAG) provides a framework for bridging the gap, as explained in the video below.

“There is a difference between being trained on public and licensed data and knowing what sits in the cloud environment of a brand,” said Hugo Loriot, head of data and technology integration at The Brandtech Group. He added, “All of the data that is very specific to a brand, this is off-limits for a [large language] model — unless you have a way to feed that data into the model, which is exactly what RAG does.”
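To make the idea concrete, here is a minimal sketch of the retrieve-then-prompt loop. It assumes a toy in-memory document store and simple word-overlap (cosine) similarity, standing in for the vector embeddings and vector databases that production RAG systems typically use. The documents, the `public` flag, and the functions `retrieve` and `build_prompt` are hypothetical illustrations, not any vendor's API, and the sketch stops at assembling the prompt rather than calling a particular LLM.

```python
import math
import re
from collections import Counter

# Hypothetical brand documents. "public" marks what the brand allows the model to see.
DOCUMENTS = [
    {"id": "pricing-2024", "text": "Spring sale: all sneakers are 20 percent off until April 30.", "public": True},
    {"id": "returns", "text": "Customers can return unworn sneakers within 60 days for a full refund.", "public": True},
    {"id": "margins", "text": "Internal note: sneaker margins are 45 percent before the sale.", "public": False},
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """Return up to k relevant documents, considering only those marked public."""
    q = tokenize(query)
    # Filter on access first, so private documents can never reach the prompt.
    scored = [(cosine(q, tokenize(d["text"])), d) for d in docs if d["public"]]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, docs: list) -> str:
    """Combine the retrieved context and the user's question into one LLM prompt."""
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "Are sneakers on sale?"
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

Running it prints a prompt that includes the sale and returns notes but not the internal margins note. Because the access check happens before ranking, the model only ever sees what the brand has chosen to share, and updating `DOCUMENTS` changes the chatbot's answers without retraining the model.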

Continue reading this article on digiday.com.