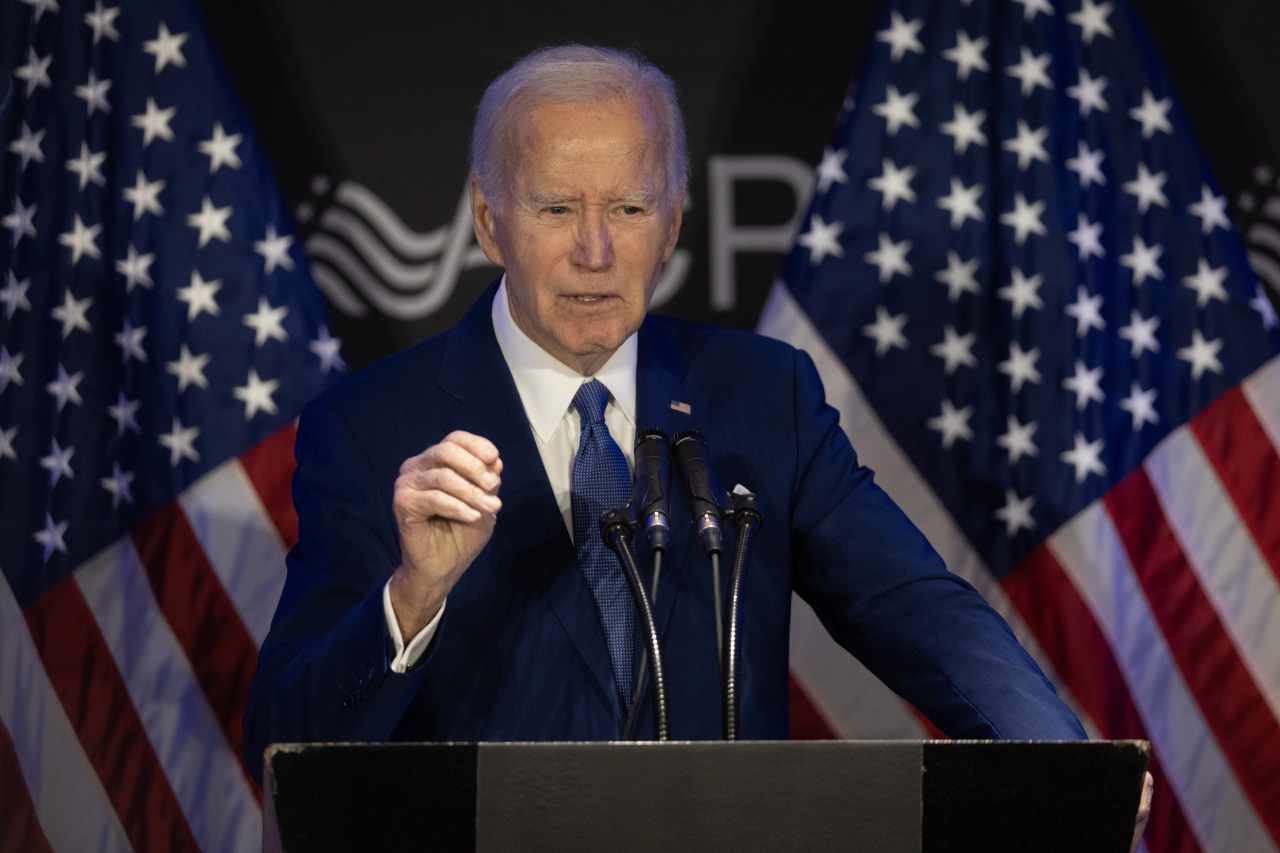

OpenAI can’t afford to ignore Hollywood’s warning

OpenAI’s Hollywood misadventure is reminiscent of an earlier dispute between the media industry and Silicon Valley.

In February, executives from OpenAI visited Los Angeles hoping to strike deals with major Hollywood studios. They left empty-handed. According to Bloomberg, the studios declined to partner with OpenAI on Sora, the company’s AI-powered video generation tool. They cited concerns over how OpenAI would use their data and over potential backlash from unions worried about job losses following the 2023 Hollywood strikes.

OpenAI’s failure to win over Hollywood exposes a deeper issue for the company: It seems unwilling to demonstrate that it can work within the contracts, licensing agreements, and labor protections that have governed the entertainment business for more than a century. OpenAI isn’t just alienating the $100 billion-plus entertainment business; it is also missing an opportunity to show other industries that it is capable of building viable, long-term partnerships.

OpenAI’s Hollywood misadventure is reminiscent of an earlier dispute between the media industry and Silicon Valley. In the early 2000s, Napster seemed poised to upend the music industry by offering users an unprecedented digital catalog of songs. The service became a cultural phenomenon, but it was predicated on distributing unlicensed (stolen) music, and the company’s refusal to engage with copyright law proved costly in the long run. Major labels sued, and by the time Napster realized it was better off negotiating licensing deals, it was too late. The music industry had moved on, opting for sustainable agreements with services such as Rhapsody, iTunes, and eventually Spotify. OpenAI’s technology is far more transformative than Napster’s, but its story could end the same way.

Like Napster, OpenAI fails to see that working with established industries is the better path to long-term growth. Instead of engaging with creators, OpenAI has scraped news articles, ingested entire libraries of books without securing the rights, and launched a voice assistant that sounded a lot like Scarlett Johansson, who had previously refused permission to use her voice. Amid criticism over the Johansson case, OpenAI claimed the voice belonged to a different, unnamed actress and carried on. That strategy of moving fast and asking permission later may have made OpenAI the dominant player in generative AI so far, but it’s not sustainable.

Studio-friendly AI

Just as Napster’s defiance cleared the way for licensed music services, OpenAI is opening the door for the film industry to partner with AI companies that respect its intellectual property. Lionsgate announced a partnership with Runway to build a proprietary AI model trained exclusively on the studio’s catalog. The resulting model will be fully transparent and controlled: Lionsgate will know exactly what IP is being used and can distribute revenue internally or reinvest it. Similarly, James Cameron has teamed up with Stability AI to bring AI to special effects, and veteran film executive Peter Chernin has joined with Andreessen Horowitz to launch an AI-native studio, Promise.

These ventures differ from OpenAI in that they are either training models exclusively on licensed data, using AI in narrow, artist-controlled pipelines, or building new studios with Hollywood’s direct involvement. By insisting on control and brushing aside filmmakers’ concerns, OpenAI may eventually find itself on the outside looking in.

OpenAI should learn from other tech companies that once saw regulation as an obstacle but later realized the benefits of cooperation, often after bruising fights with regulators. Uber, for example, touted itself as a “disruptor,” yet it eventually worked with city governments, including those of London and Washington, D.C., to secure municipal contracts and bolster market trust.

OpenAI still has time to convince legacy industries that it can respect intellectual property rights, data privacy, and labor rules.

As a first step, OpenAI should offer more transparency around its AI training data, helping studios and unions understand what copyrighted material is being used. A content provenance system that traces AI-generated outputs, such as scripts or performances, back to their sources would not require OpenAI to disclose the model in full. OpenAI, studios, and creators could also rely on third-party audits to certify that the models were developed under agreed-upon data restrictions and standards, all while protecting proprietary information.

OpenAI should also agree to share revenue with rights holders in some form. A Spotify-style royalty system may not translate directly to film, but the core idea of a creative fund is still viable, especially in controlled cases like Runway’s deal with Lionsgate. The idea isn’t to pay per use, but to license pre-approved datasets and share revenue tied to the videos derived from that content.

OpenAI in Hollywood

There’s a real opportunity in bundling data: OpenAI could follow Runway’s lead, using licensed bundles of copyrighted content developed in partnership with unions and individual studios. Even a limited system would demonstrate a willingness to collaborate. The greatest risk for OpenAI isn’t getting the details wrong; it’s doing nothing while competitors move ahead.

In this vein, it’s in OpenAI’s best interest to engage more broadly with Hollywood: not just studios, but also labor and creators. The 2023 strikes showed that unions shape public narratives and policy, and any long-term strategy must reflect that. Funneling a portion of AI-driven revenue to industry professionals would signal an intent to work with, not around, human talent. Such an initiative could reframe AI as a creative partner rather than a threat, and help OpenAI stand apart from opaque general-purpose models.

Last month, more than 400 Hollywood creatives sent a letter to the White House, arguing that AI companies should follow copyright law like any other industry: “There is no reason to weaken or eliminate the copyright protections that have helped America flourish,” the letter said. The longer OpenAI waits to act, the more it opens the door for others to do so first.

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.

This story was originally featured on Fortune.com
