Google’s latest AI model is missing a key safety report in apparent violation of promises made to the U.S. government and at international summits

Experts say it is "disappointing" Google and other top AI labs seem to be reneging on commitments to inform the public about the capabilities and risks their AI models present

Google's latest AI model, Gemini 2.5 Pro, was released without a key safety report—a move that critics say violates public commitments the company made to both the U.S. government and at an international summit last year.

The company has touted the newest Gemini model, launched in late March, as its most capable yet, claiming it "leads common benchmarks by meaningful margins."

But Google’s failure to release an accompanying safety research report—also known as a “system card” or “model card”—reneges on previous commitments the company made.

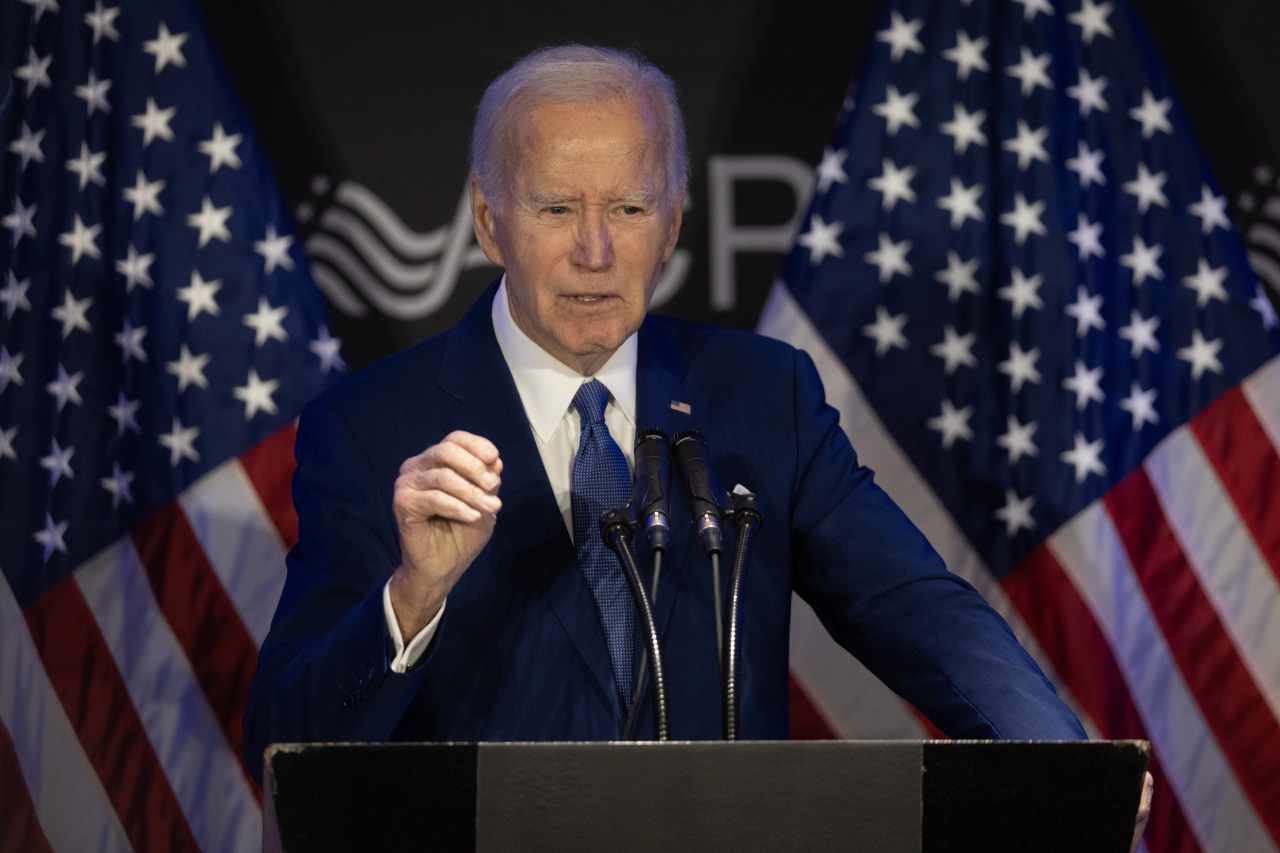

At a July 2023 meeting convened by then-President Joe Biden’s administration at the White House, Google was among a number of leading AI companies that signed a series of commitments, including a pledge to publish reports for all major public model releases more powerful than the current state of the art at the time. Gemini 2.5 Pro almost certainly would be considered “in scope” of these White House Commitments.

At the time, Google agreed the reports “should include the safety evaluations conducted (including in areas such as dangerous capabilities, to the extent that these are responsible to publicly disclose), significant limitations in performance that have implications for the domains of appropriate use, discussion of the model’s effects on societal risks such as fairness and bias, and the results of adversarial testing conducted to evaluate the model’s fitness for deployment.”

After the G7 meeting in Hiroshima, Japan, in October 2023, Google was among the companies that committed to comply with a voluntary code of conduct on the development of advanced AI. That G7 code includes a commitment to "publicly report advanced AI systems’ capabilities, limitations and domains of appropriate and inappropriate use, to support ensuring sufficient transparency, thereby contributing to increase accountability."

Then, at an international summit on AI safety held in Seoul, South Korea, in May 2024, the company reiterated similar promises—committing to publicly disclose model capabilities, limitations, appropriate and inappropriate use cases, and to provide transparency around its risk assessments and outcomes.

In an emailed statement, a spokesperson for Google DeepMind, which is the Google division responsible for developing Google's Gemini models, told Fortune that the latest Gemini has undergone “pre-release testing, including internal development evaluations and assurance evaluations which had been conducted before the model was released.” They added that a report with additional safety information and model cards was “forthcoming.” But the spokesperson first issued that statement to Fortune on April 2, and since then no model card has been published.

Tech companies are backsliding on promises, experts fear

Google is not the only one facing scrutiny over its commitment to AI safety. Earlier this year, OpenAI also failed to release a timely model card for its Deep Research model, instead publishing a system card weeks after the project had been initially released. Meta’s recent safety report for Llama 4 has been criticized for its lack of length and detail.

“These failures by some of the major labs to have their safety reporting keep pace with their actual model releases are particularly disappointing considering those same companies voluntarily committed to the U.S. and the world to produce those reports—first in commitments to the Biden administration in 2023 and then through similar pledges in 2024 to comply with the AI code of conduct adopted by the G7 nations at their AI summit in Hiroshima,” Kevin Bankston, an advisor on AI governance at the Center for Democracy and Technology, told Fortune.

Information on government safety evaluations also absent

The Google spokesperson's statement also failed to address questions as to whether Gemini 2.5 Pro had been submitted to external evaluation by the U.K. AI Security Institute or the U.S. AI Safety Institute.

Google had previously provided earlier generations of its Gemini models to the U.K. AI Safety Institute for evaluation.

At the Seoul Safety Summit, Google signed up to the “Frontier AI Safety Commitments,” which included a pledge to “provide public transparency on the implementation” of safety evaluations, except when doing so “would increase risk or divulge sensitive commercial information to a degree disproportionate to the societal benefit.” The pledge said that more detailed information that could not be publicly shared should still be shared with the governments of the countries in which the companies are based, which would be the U.S. in the case of Google.

The companies also committed to “explain how, if at all, external actors, such as governments, civil society, academics, and the public are involved in the process of assessing risks of their AI models.” Google is potentially in violation of that commitment too by not answering direct questions on whether it has submitted Gemini 2.5 Pro to either U.S. or U.K. government evaluators.

Deployment over transparency

The missing safety report has prompted concerns that Google may be favoring rapid deployment over transparency.

“Responsible research and innovation means that you are transparent about the capabilities of the system,” Sandra Wachter, a professor and senior researcher at the Oxford Internet Institute, told Fortune. “If this was a car or a plane, we wouldn't say: let's just bring this to market as quickly as possible and we will look into the safety aspects later. Whereas with generative AI there’s an attitude of putting this out there and worrying, investigating, and fixing the issues with it later.”

Recent political changes along with a growing rivalry between Big Tech companies may be causing some companies to row back on previous safety commitments as they race to deploy AI models.

“The pressure point for these companies of being faster, being quicker, being the first, being the best, being dominant, is more prevalent than it used to be,” Wachter said, adding that safety standards were falling across the industry. These slipping standards could be driven by a growing concern among tech companies and some governments that AI safety procedures are getting in the way of innovation.

In the U.S., the Trump administration has signalled that it plans to approach AI regulation with a significantly lighter touch than the Biden administration. The new government has already rescinded a Biden-era executive order on AI and has been cosying up to tech leaders. At the recent AI summit in Paris, U.S. Vice President JD Vance said that "pro-growth AI policies" should be prioritised over safety and that AI was "an opportunity that the Trump administration will not squander."

At the summit, both the U.K. and the U.S. refused to sign an international agreement on artificial intelligence that pledged an "open", "inclusive" and "ethical" approach to the technology's development.

“If we can’t count on these companies to fulfill even their most basic safety and transparency commitments when releasing new models—commitments that they themselves voluntarily made—then they are clearly releasing models too quickly in their competitive rush to dominate the field,” Bankston said.

“Especially to the extent AI developers continue to stumble in these commitments, it will be incumbent on lawmakers to develop and enforce clear transparency requirements that the companies can’t shirk,” he added.

This story was originally featured on Fortune.com
