OpenAI's Hot New AI Has an Embarrassing Problem

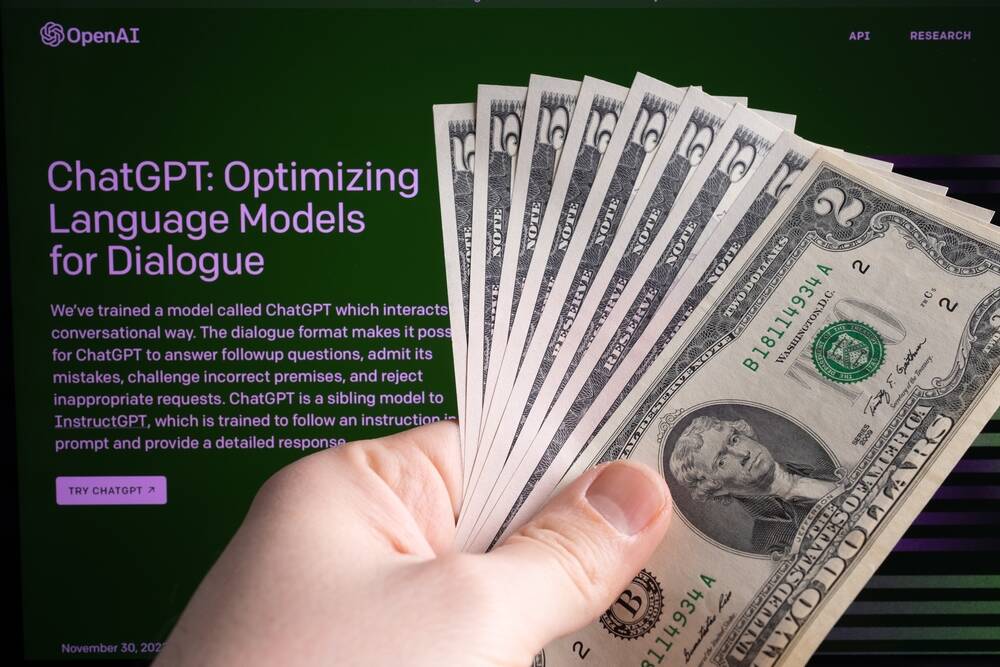

Bucking the Trend

OpenAI launched its latest AI reasoning models, dubbed o3 and o4-mini, last week.

According to the Sam Altman-led company, the new models outperform their predecessors and "excel at solving complex math, coding, and scientific challenges while demonstrating strong visual perception and analysis."

But there's one extremely important area where o3 and o4-mini appear to be taking a major step back: they tend to make things up, or "hallucinate," substantially more than those earlier versions, as TechCrunch reports.

The news once again highlights a nagging technical issue that has plagued the industry for years now. Tech companies have struggled to rein in rampant hallucinations, which have greatly undercut the usefulness of tools like ChatGPT.

Worryingly, OpenAI's two new models also buck a historical trend in which each new model hallucinated slightly less than the previous one, as TechCrunch points out, suggesting OpenAI is now headed in the wrong direction.

Head in the Clouds

According to OpenAI's own internal testing, o3 and o4-mini tend to hallucinate more than older models, including o1, o1-mini, and even o3-mini, which was released in late January.

Worse yet, the firm doesn't appear to fully understand why. According to its technical report, "more research is needed to understand the cause" of the rampant hallucinations.

Its o3 model scored a hallucination rate of 33 percent on the company's in-house accuracy benchmark, dubbed PersonQA. That's roughly double the rate of the company's preceding reasoning models.

Its o4-mini scored an abysmal hallucination rate of 48 percent, part of which could be due to it being a smaller model that has "less world knowledge" and therefore tends to "hallucinate more," according to OpenAI.

Nonprofit AI research company Transluce also found in its own testing that o3 had a strong tendency to hallucinate, especially when generating computer code.

The extent to which it tried to cover for its own shortcomings is baffling.

"It further justifies hallucinated outputs when questioned by the user, even claiming that it uses an external MacBook Pro to perform computations and copies the outputs into ChatGPT," Transluce wrote in its blog post.

Experts even told TechCrunch that OpenAI's o3 model hallucinates website links that don't work when the user tries to click them.

Unsurprisingly, OpenAI is well aware of these shortcomings.

"Addressing hallucinations across all our models is an ongoing area of research, and we’re continually working to improve their accuracy and reliability," OpenAI spokesperson Niko Felix told TechCrunch.


The post OpenAI's Hot New AI Has an Embarrassing Problem appeared first on Futurism.
