Google says its AI behemoth is 24x faster than the world's best supercomputer - but this analyst, armed with a spreadsheet, disagrees

Google says its Ironwood TPU pod is 24x faster than El Capitan, the world's fastest supercomputer, but an analyst calls the comparison “perfectly silly”.

- Google claims its Ironwood TPU pod is 24x faster than the El Capitan supercomputer

- An analyst says Google's performance comparison is “perfectly silly”

- Comparing AI systems and HPC machines is fine, but they serve different purposes

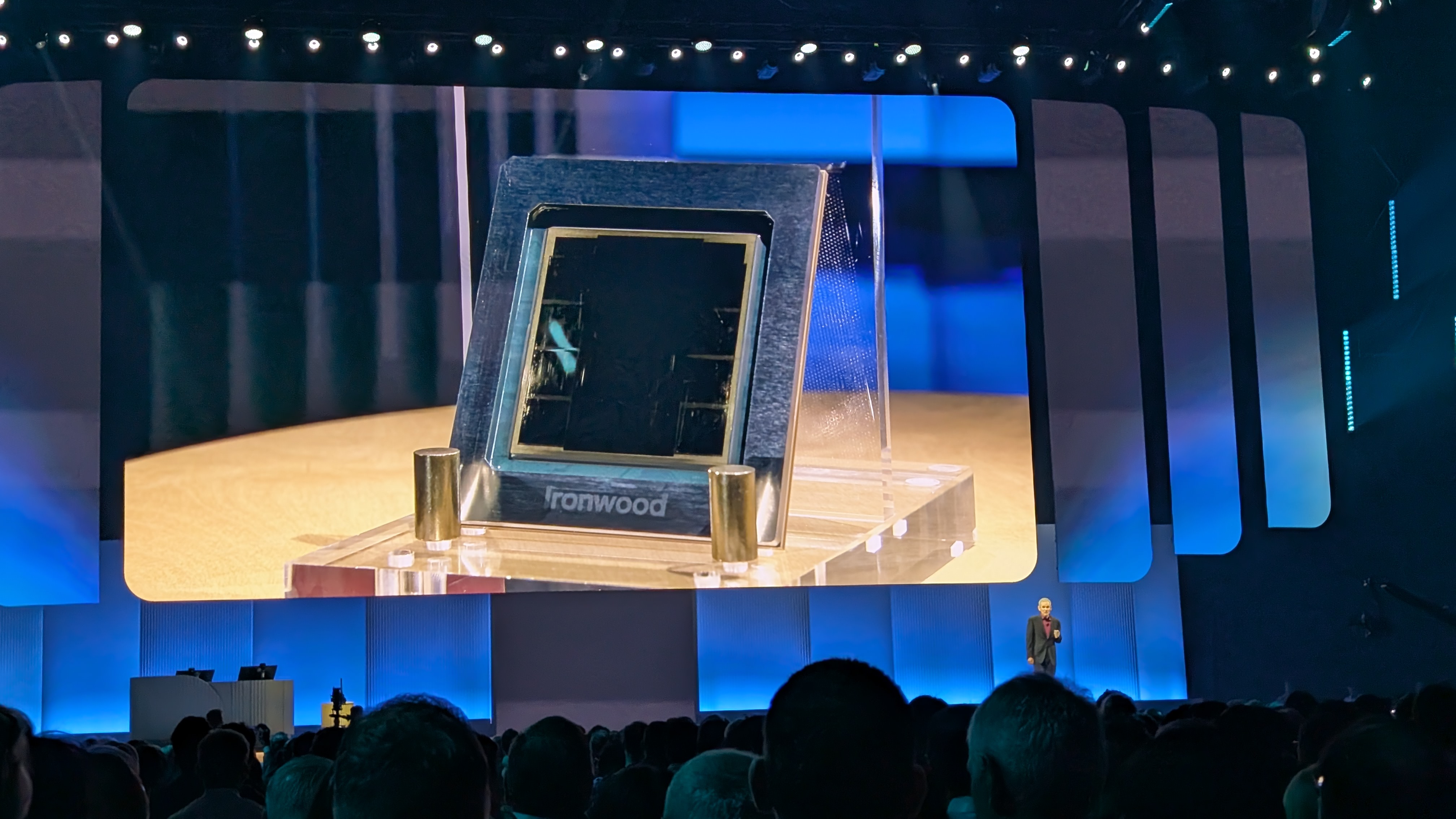

At the recent Google Cloud Next 2025 event, the tech giant claimed that its new Ironwood TPU v7p pod is 24 times faster than El Capitan, the exascale-class supercomputer at Lawrence Livermore National Laboratory.

But Timothy Prickett Morgan of TheNextPlatform has dismissed the claim.

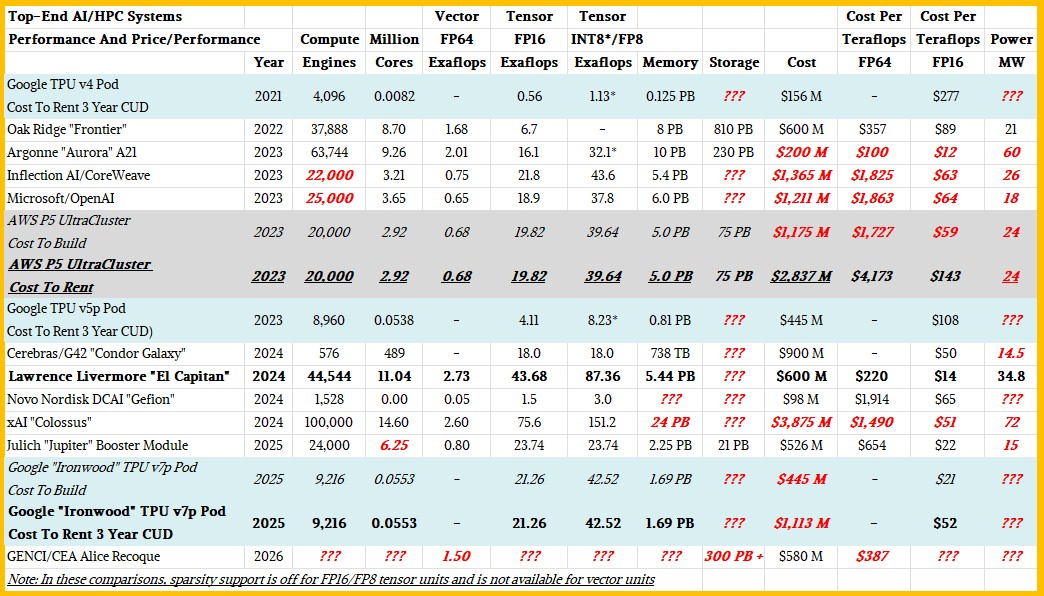

"Google is comparing the sustained performance of El Capitan with 44,544 AMD ‘Antares-A’ Instinct MI300A hybrid CPU-GPU compute engines running the High Performance LINPACK (HPL) benchmark at 64-bit floating point precision against the theoretical peak performance of an Ironwood pod with 9,216 of the TPU v7p compute engines," he wrote. "This is a perfectly silly comparison, and Google’s top brass not only should know better, but does."
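The short sketch below shows how a ratio of roughly 24x can come out of the comparison Prickett Morgan describes: it divides the Ironwood pod's low-precision theoretical peak by El Capitan's sustained 64-bit HPL result. Two inputs are assumptions that this article does not state: that Google's figure uses the pod's FP8 peak (taken here as the 42.52 exaflops estimate quoted further down), and that El Capitan's HPL result is about 1.742 exaflops, as listed on the November 2024 Top500.

```python
# Back-of-the-envelope check of how a "24x" headline ratio can arise.
# ASSUMPTIONS (not stated in the article):
#   - Google's figure compares the Ironwood pod's FP8 theoretical peak
#     against El Capitan's sustained FP64 HPL result.
#   - El Capitan's HPL result is ~1.742 exaflops (Top500, November 2024).

IRONWOOD_POD_FP8_PEAK_EF = 42.52   # theoretical peak at FP8 (TheNextPlatform estimate)
EL_CAPITAN_HPL_FP64_EF = 1.742     # sustained HPL at FP64 (assumed Top500 figure)


def ratio(numerator_ef: float, denominator_ef: float) -> float:
    """Return how many times larger one exaflops figure is than another."""
    return numerator_ef / denominator_ef


headline_ratio = ratio(IRONWOOD_POD_FP8_PEAK_EF, EL_CAPITAN_HPL_FP64_EF)
print(f"FP8 peak vs FP64 HPL sustained: {headline_ratio:.1f}x")
```

Dividing a low-precision theoretical peak by a 64-bit sustained benchmark score mixes two different kinds of work, which is the heart of the objection.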

24X the performance of El Capitan? Nope!

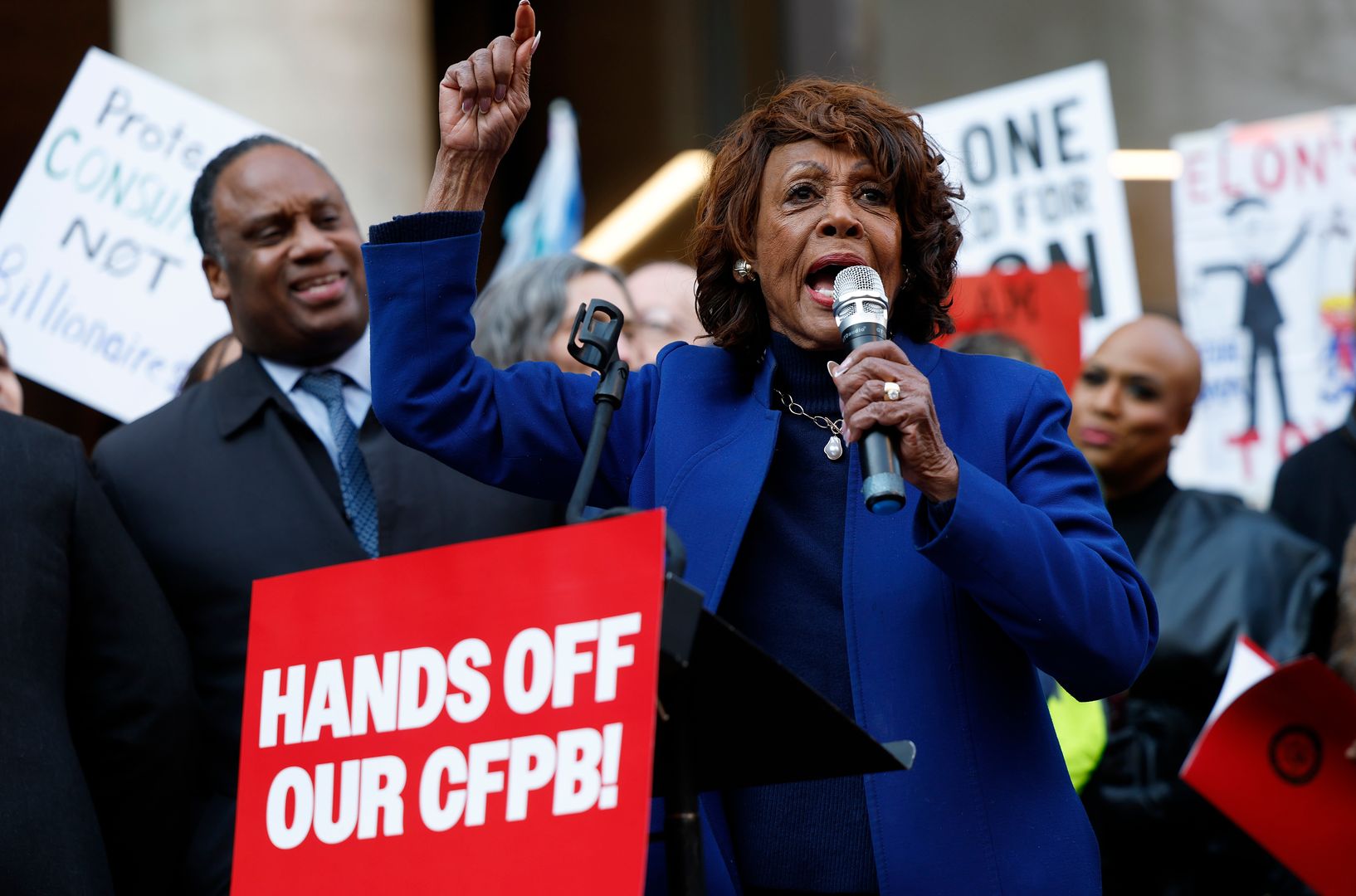

Prickett Morgan argues that while comparing AI systems with HPC machines is valid, the two serve different purposes: El Capitan is optimized for high-precision (64-bit) simulations, while the Ironwood pod is tailored to low-precision AI inference and training.

What matters, he adds, is not just peak performance but cost. "High performance has to have the lowest cost possible, and no one gets better deals on HPC gear than the US government’s Department of Energy."

By TheNextPlatform's estimates, the Ironwood pod delivers 21.26 exaflops of FP16 and 42.52 exaflops of FP8 peak performance, and costs $445 million to build or $1.1 billion to rent over three years. That works out to about $21 (build) or $52 (rental) per FP16 teraflops.

El Capitan, meanwhile, delivers 43.68 exaflops at FP16 and 87.36 exaflops at FP8 for a build cost of $600 million, or about $14 per FP16 teraflops.
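The cost-per-teraflops figures can be reproduced with simple division, sketched below. The article does not say which precision the per-teraflops numbers are based on; this sketch assumes FP16 because that assumption reproduces the quoted $21, $52 and $14. The helper name `cost_per_teraflops` is hypothetical.

```python
# Reproduce the cost-per-teraflops estimates quoted in the article.
# ASSUMPTION: the per-teraflops figures use FP16 peak performance.

TERAFLOPS_PER_EXAFLOPS = 1_000_000  # 1 exaflops = 10^6 teraflops


def cost_per_teraflops(cost_usd: float, exaflops: float) -> float:
    """Hypothetical helper: dollars spent per teraflops of peak performance."""
    return cost_usd / (exaflops * TERAFLOPS_PER_EXAFLOPS)


# (cost in US dollars, FP16 peak in exaflops), figures from the article
systems = {
    "Ironwood pod (build)": (445e6, 21.26),
    "Ironwood pod (3-year rental)": (1.1e9, 21.26),
    "El Capitan (build)": (600e6, 43.68),
}

for name, (cost, fp16_exaflops) in systems.items():
    print(f"{name}: ${cost_per_teraflops(cost, fp16_exaflops):.0f} per FP16 teraflops")
```

Under this assumption the lower figure for El Capitan reflects both its higher estimated peak and its lower build cost.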

"El Capitan has 2.05X more performance at FP16 and FP8 resolution than an Ironwood pod at peak theoretical performance," Prickett Morgan notes. "The Ironwood pod does not have 24X the performance of El Capitan."
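The 2.05X figure follows directly from the peak estimates quoted above; the minimal check below divides El Capitan's estimated peak by the Ironwood pod's at each precision, using only numbers from the article.

```python
# Compare estimated peak performance at matching precisions (exaflops).
ironwood_pod = {"FP16": 21.26, "FP8": 42.52}
el_capitan = {"FP16": 43.68, "FP8": 87.36}

# Ratio of El Capitan's peak to the Ironwood pod's peak at each precision.
ratios = {p: el_capitan[p] / ironwood_pod[p] for p in ("FP16", "FP8")}

for precision, r in ratios.items():
    print(f"{precision}: El Capitan / Ironwood pod = {r:.2f}x")
```

Because both systems double their throughput going from FP16 to FP8, the ratio is the same at either precision.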

He also points to HPL-MxP, a mixed-precision variant of the HPL benchmark: "HPL-MxP uses a bunch of mixed precision calculations to converge to the same result as all-FP64 math on the HPL test, and these days delivers around an order of magnitude effective performance boost."

Prickett Morgan's article also includes a comprehensive table comparing top-end AI and HPC systems on performance, memory, storage, and cost-efficiency. While Google’s TPU pods remain competitive, he maintains that El Capitan still holds a clear cost/performance advantage.

"This comparison is not perfect, we realize," he admits. "All estimates are shown in bold red italics, and we have question marks where we are not able to make an estimate at this time."