AI’s infrastructure problem isn’t tariffs, it’s unused capacity

The evolution of AI depends on using the abundant computing power that already exists, not on building new data megaliths.

The impact that the new US administration’s sweeping global tariffs will have on building out the country’s AI infrastructure is a hot topic. This is not least because US tech giants continue to announce huge budgets for sprawling data centers, with a recent report estimating that Microsoft, Google and Meta plan to spend a combined $325 billion on new data centers.

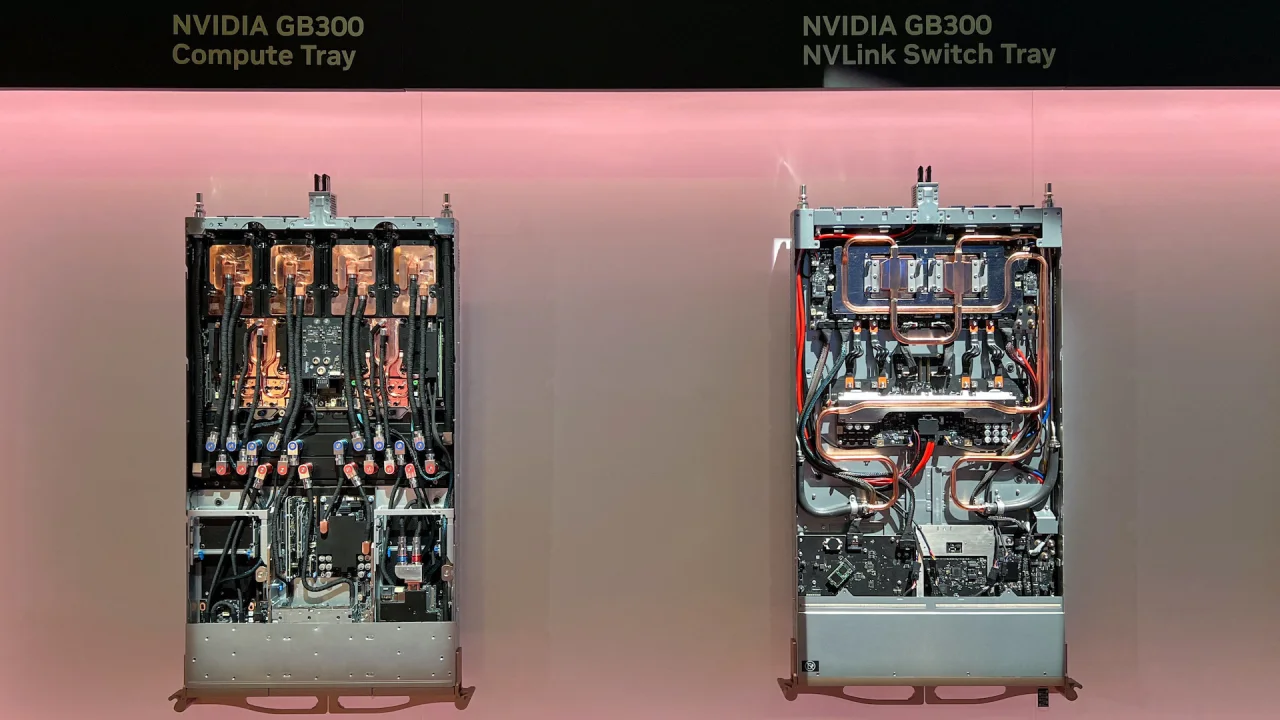

Analysts are focusing mainly on the rising cost of steel, copper, and other metals needed to expand physical infrastructure, as well as the specialized semiconductors and GPUs essential to data centers. By some estimates, construction costs for commercial projects may rise by as much as 5% under the new US global tariff regime.

While these estimates may be correct, the analysis - at least in terms of data centers - is missing a crucial element. Debates over importing steel, GPUs, servers, and networking equipment assume that meeting rising computing demand means we must continuously build. The reality, however, is that we are significantly underutilizing the computing resources we already have.

The resource utilization problem

What we have here is a manufacturing solution being proposed for a distribution problem. Building additional facilities to meet the growing demand for AI computing requires enormous capital investment, and the centralized model it relies on creates vulnerabilities of its own: single points of failure and exposure to malicious actors.

Meanwhile, vast computing resources are sitting idle worldwide. According to the SPECpower benchmark, 20% to 60% of the power consumed by computers connected to the public cloud is drawn while they are idle, and so cannot be attributed to active use. This suggests that a significant amount of cloud and GPU computing power is going unused globally.

In China, the situation is markedly worse. During the country’s recent AI infrastructure “gold rush”, billions of dollars were spent on data centers, around 80% of which are now sitting idle, according to a report published by MIT Technology Review.

This is because the recent release of the Chinese LLM DeepSeek has revealed a startling truth: AI doesn’t necessarily need $30,000 GPUs with enormous computing power to operate. Indeed, as the release of DeepSeek V3 has just shown, an LLM can be run on a Mac computer.

This serves to underline the enormous inefficiencies in global computing power that have persisted for years simply because we have not sought to aggregate and redistribute existing resources. Rather than building entirely new infrastructure, we can tap into reserves of existing computational power. All we need to do is rethink computing infrastructure.

Utilizing existing infrastructure

Harnessing unused computational power is the most efficient way to meet the demands of AI, and Decentralized Physical Infrastructure Networks (DePINs) offer innovative solutions. Rather than building more centralized facilities, DePIN cloud models can connect buyers with suppliers of unused computing power through a globally distributed network that is not reliant on single data centers.

One might think of this as the "Airbnb of Compute" - a way to connect those who need GPU capacity with those who have GPU capacity to spare.

By putting the world’s idle computing power to work, this approach has the potential to meet the needs of businesses and individuals without spending billions of dollars on new physical infrastructure.

By taking advantage of underutilized GPU power, DePIN cloud platforms can offer high-performance computing at prices significantly lower than those of established providers like AWS and Azure. Because they draw on hardware that already exists, this structural advantage makes tariff concerns largely irrelevant.

Rather than spending billions of dollars on new facilities that may - as China’s experience demonstrates - end up underutilized, DePIN models maximize existing resources. As DeepSeek has shown, the distribution of computing power can also widen participation in AI tools, enabling small and medium enterprises to leverage machine learning technology without prohibitive costs.

Computing without constraints

Globally, we have come to realize the drawbacks of centralization. In the cloud space, by pivoting away from large, centralized data centers, we can build networks that are more resilient to shocks such as trade wars or pandemics. These distributed systems respond to demand naturally - expanding when capacity is needed and contracting when it is not - without requiring vast new facilities.

For business leaders and policymakers, this represents an opportunity to rethink assumptions about infrastructure development. Rather than subsidizing ever larger data centers, leaders could focus policy on incentivizing better use of existing resources.

In the US especially, rather than poring over the potential increase in the cost of importing more steel, copper, and computational hardware, the Stargate Infrastructure Project might focus on the vast unused capacity the country already has. And, as cost-cutting is a core focus of this administration, this approach would be fully aligned with its priorities.

Perhaps of even more interest to such a conservative administration would be the savings in energy costs that such an approach would bring. Distributed computing networks typically consume less energy than large data centers and, more importantly, do so in a way that does not negatively impact nearby communities and businesses.

Innovation through efficiency

Most importantly, though, distributed computing networks enable faster innovation cycles by lowering barriers to access. They create new economic opportunities for resource providers previously excluded from the AI economy - and innovation and entrepreneurship are nothing if not the raison d'être of Republican idealism.

Ultimately, current debates around tariffs and their impact on data center construction costs distract from a glaring opportunity. By embracing decentralized computing models, the world can meet growing AI demands without being constrained by infrastructure limitations. The technology exists today, and the economic case is compelling. The only remaining question is how quickly businesses and policymakers will recognize this.

This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro